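[Abstract] This article shows how to set up a continuous integration (CI) and continuous deployment (CD) environment with Jenkins and GitLab on Kubernetes, connect all the stages end to end, and demonstrate the result with test samples. It also records the pitfalls I ran into along the way. I originally planned to cover everything in one article, but it grew too long, so it will be split into three parts.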

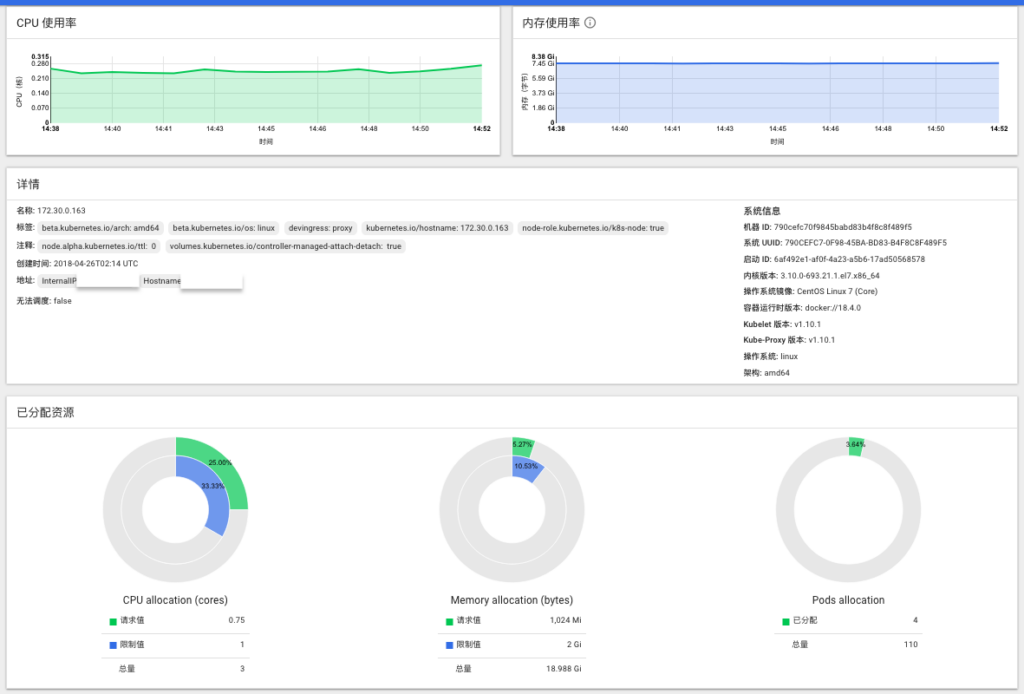

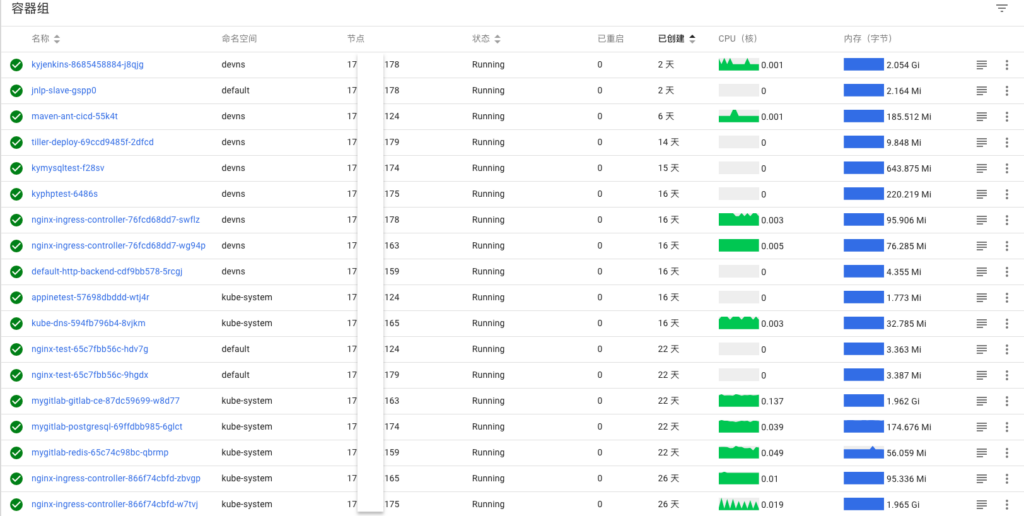

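This article focuses on integrating and using Jenkins. Building the K8S cluster itself is not covered in detail (plenty of other articles online do that); only the basic environment is described here:

1. Required environment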

- Several virtual machines to serve as k8s nodes

- OS: CentOS Linux release 7.4.1708 (Core)

- Docker: docker-ce-18.04

- Kubernetes: v1.10.1

- Calico: v3.1

- Etcd: 3.2.18

- GlusterFS: 3.12.8

- Docker-harbor: v1.4.0

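Other components that need to be set up:

- KubeDNS (the internal DNS of Kubernetes)

- Ingress (exposes services for external access)

- HAProxy (high availability for the Masters; it also acts as a reverse proxy pointing at Ingress, providing the entry point for services)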

2. Setup steps

2.1 The Kubernetes cluster environment is as follows:

    # kubectl get nodes -o wide
    NAME       STATUS   ROLES        AGE   VERSION   EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION               CONTAINER-RUNTIME
    node_159   Ready    k8s-master   28d   v1.10.1   <none>        CentOS Linux 7 (Core)   3.10.0-693.21.1.el7.x86_64   docker://18.4.0
    node_160   Ready    k8s-master   28d   v1.10.1   <none>        CentOS Linux 7 (Core)   3.10.0-693.21.1.el7.x86_64   docker://18.4.0
    node_179   Ready    k8s-master   28d   v1.10.1   <none>        CentOS Linux 7 (Core)   3.10.0-693.21.1.el7.x86_64   docker://18.4.0
    node_124   Ready    k8s-node     28d   v1.10.1   <none>        CentOS Linux 7 (Core)   3.10.0-693.21.1.el7.x86_64   docker://18.4.0
    node_163   Ready    k8s-node     28d   v1.10.1   <none>        CentOS Linux 7 (Core)   3.10.0-693.21.1.el7.x86_64   docker://18.4.0
    node_165   Ready    k8s-node     28d   v1.10.1   <none>        CentOS Linux 7 (Core)   3.10.0-693.21.1.el7.x86_64   docker://18.4.0
    node_174   Ready    k8s-node     28d   v1.10.1   <none>        CentOS Linux 7 (Core)   3.10.0-693.21.1.el7.x86_64   docker://18.4.0
    node_175   Ready    k8s-node     28d   v1.10.1   <none>        CentOS Linux 7 (Core)   3.10.0-693.21.1.el7.x86_64   docker://18.4.0
    node_178   Ready    k8s-node     28d   v1.10.1   <none>        CentOS Linux 7 (Core)   3.10.0-693.21.1.el7.x86_64   docker://18.4.0

2.2 Etcd runs on the same three Master nodes

    # export ETCDCTL_API=3
    # etcdctl --cacert=/etc/etcd/ssl/etcd-root-ca.pem \
        --cert=/etc/etcd/ssl/etcd.pem \
        --key=/etc/etcd/ssl/etcd-key.pem \
        --endpoints=https://node_160:2379,https://node_159:2379,https://node_179:2379 \
        member list
    39a312da3ed48ac9, started, etcd3, https://node_179:2380, https://node_179:2379
    477687de486b7ec1, started, etcd1, https://node_160:2380, https://node_160:2379
    f413d33b920eca86, started, etcd2, https://node_159:2380, https://node_159:2379

*** Mind the ETCDCTL_API version: the certificate flags and the output format differ between v2 and v3 ***

    # v2 version
    # export ETCDCTL_API=2
    # etcdctl --ca-file=/etc/etcd/ssl/etcd-root-ca.pem \
        --cert-file=/etc/etcd/ssl/etcd.pem \
        --key-file=/etc/etcd/ssl/etcd-key.pem \
        --endpoints=https://node_160:2379,https://node_159:2379,https://node_179:2379 \
        member list
    39a312da3ed48ac9: name=etcd3 peerURLs=https://node_179:2380 clientURLs=https://node_179:2379 isLeader=false
    477687de486b7ec1: name=etcd1 peerURLs=https://node_160:2380 clientURLs=https://node_160:2379 isLeader=true
    f413d33b920eca86: name=etcd2 peerURLs=https://node_159:2380 clientURLs=https://node_159:2379 isLeader=false
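Because the v3 `member list` output is plain comma-separated text, a quick membership check can be scripted on top of it. The sketch below is only an illustration: the function name `count_unstarted` is made up for this example, and it assumes that any value other than `started` in the second column means the member is not ready. The sample text is the v3 output from this section pasted in as a literal.

```shell
#!/bin/sh
# Count etcd members whose status column is anything other than "started".
# Input (stdin): the comma-separated lines printed by the v3 `etcdctl member list`.
count_unstarted() {
    awk -F', ' 'NF >= 2 && $2 != "started" { n++ } END { print n + 0 }'
}

# Sample input: the v3 output from this section, pasted as literal text.
sample='39a312da3ed48ac9, started, etcd3, https://node_179:2380, https://node_179:2379
477687de486b7ec1, started, etcd1, https://node_160:2380, https://node_160:2379
f413d33b920eca86, started, etcd2, https://node_159:2380, https://node_159:2379'

printf '%s\n' "$sample" | count_unstarted   # prints 0
```

On a live cluster the same function could be fed from the real command, for example `etcdctl ... member list | count_unstarted`, with the certificate and endpoint flags shown earlier.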

2.3 Set up GlusterFS and create a StorageClass

Check the GlusterFS status:

    # heketi-cli --server http://GlusterFS:8088 --user admin --secret "mypassword" topology info --json | python -m json.tool

The output looks like this:

    {
        "clusters": [
            {
                "id": "4200325652c2c9d1301e1e2ed01ef794",
                "nodes": [
                    {
                        "cluster": "4200325652c2c9d1301e1e2ed01ef794",
                        "devices": [
                            {
                                "bricks": [
                                    {
                                        "device": "643e92564180fd36f4df5899b5895b79",
                                        "id": "08453ccc4b2226a091792c8c0ce6b39f",
                                        "node": "045b2440cb4e85a5b1dd8d66e2caa700",
                                        "path": "/var/lib/heketi/mounts/vg_643e92564180fd36f4df5899b5895b79/brick_08453ccc4b2226a091792c8c0ce6b39f/brick",
                                        "size": 10485760,
                                        "volume": "befdc44e4deadbaddbb319f160f3f31d"
                                    },
                                    ...
                                    ...
                                ],
                                "id": "643e92564180fd36f4df5899b5895b79",
                                "name": "/dev/sdb",
                                "state": "online",
                                "storage": {
                                    "free": 2420543488,
                                    "total": 2516447232,
                                    "used": 95903744
                                }
                            }
                        ],
                        "hostnames": {
                            "manage": [
                                "node_165"
                            ],
                            "storage": [
                                "node_165"
                            ]
                        },
                        "id": "045b2440cb4e85a5b1dd8d66e2caa700",
                        "state": "online",
                        "zone": 1
                    },
                    ...
                    ...
                        "id": "3668b2a1aef132c80dbdc217a6d562f1",
                        "mount": {
                            "glusterfs": {
                                "device": "node_124:vol_3668b2a1aef132c80dbdc217a6d562f1",
                                "hosts": [
                                    "node_124",
                                    "node_165"
                                ],
                                "options": {
                                    "backup-volfile-servers": "node_165"
                                }
                            }
                        },
                        ...

2.4 Create a StorageClass on Kubernetes so that PersistentVolumes are provisioned dynamically whenever a PersistentVolumeClaim requests one.

    # Create the StorageClass
    # cat storageclass.yaml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: kyglustersc
    provisioner: kubernetes.io/glusterfs
    parameters:
      resturl: "http://GlusterFS:8088"
      clusterid: "f856945f633408efa831744db5825a4b"
      restauthenabled: "true"
      restuser: "admin"
      secretNamespace: "default"
      secretName: "heketi-secret"
      gidMin: "40000"
      gidMax: "50000"
      volumetype: "replicate:2"

    # Create the Secret for GlusterFS
    # cat glusterfs-secret.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: heketi-secret
      namespace: default
    data:
      # base64 encoded password. E.g.: echo -n "mypassword" | base64
      key: QW5PbGRGaxxxxxxxx4=
    type: kubernetes.io/glusterfs

    # kubectl apply -f glusterfs-secret.yaml
    # kubectl apply -f storageclass.yaml
    # kubectl get storageclasses
    NAME          PROVISIONER               AGE
    kyglustersc   kubernetes.io/glusterfs   26d
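Two details of this step are easy to get wrong, so here is a small sketch of both. The first helper encodes the heketi password without a trailing newline (the reason for `-n` in the comment inside the Secret); the second prints a PersistentVolumeClaim that asks the `kyglustersc` StorageClass for a volume. The function names, the claim name `demo-claim`, the `1Gi` size and the `ReadWriteMany` access mode are all assumptions chosen for illustration and are not part of the original setup.

```shell
#!/bin/sh
# Base64-encode a password for the Secret's data.key field.
# printf '%s' emits no trailing newline, which a plain `echo` would add.
encode_secret() {
    printf '%s' "$1" | base64
}

# Print a PersistentVolumeClaim manifest that requests storage from a StorageClass.
# $1 = claim name (hypothetical), $2 = requested size, $3 = StorageClass name.
render_pvc() {
    cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  storageClassName: ${3:-kyglustersc}
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: $2
EOF
}

encode_secret "mypassword"      # prints bXlwYXNzd29yZA==
render_pvc demo-claim 1Gi
```

To submit the claim, the manifest could be piped straight into kubectl with `render_pvc demo-claim 1Gi | kubectl apply -f -`; if provisioning works, `kubectl get pvc` should eventually report the claim as Bound.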

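A few things to watch out for when setting up the Kubernetes environment:

(1) Docker stores its data in /var/lib/docker by default. Put that directory on an xfs partition formatted with ftype=1 (the overlay2 storage driver needs d_type support):

    mkfs.xfs -n ftype=1 -f /dev/sda3

(2) Disable swap on the nodes

(3) Give GlusterFS a separate raw disk, for example sdb

(4) Start all three Etcd nodes together; otherwise a node will just sit there waiting for the other members to become ready.

(5) Some of the required Docker images are hosted on gcr, which cannot be reached from mainland China. I pulled them on a machine that can reach gcr, exported them with save and transferred them back to my own Harbor registry, and finally loaded them for internal use

(6) Pay attention to the Ingress and HAProxy configuration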
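Note (2) can be scripted. By default, kubelet in this version range refuses to start while swap is enabled, so swap has to be turned off both at runtime and in /etc/fstab. The sketch below only handles the fstab side and works on text passed through stdin, so it can be tried safely; the function name and the sample device names are invented for this example.

```shell
#!/bin/sh
# Comment out every active swap entry in fstab-formatted text (stdin -> stdout).
# Lines that are already comments are left untouched.
comment_out_swap() {
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }'
}

# Hypothetical fstab content, used only to show the effect.
sample='/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0'

printf '%s\n' "$sample" | comment_out_swap

# On a real node (as root), a typical sequence would be:
#   swapoff -a
#   cp /etc/fstab /etc/fstab.bak
#   comment_out_swap < /etc/fstab.bak > /etc/fstab
```

Only the swap line gains a leading `#`; the root filesystem entry passes through unchanged.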

Below are some of the gcr images I imported into my private Harbor registry:

    gcr.io/kubernetes-helm/tiller                              v2.9.0          2a1d7ef9d530   3 weeks ago     36.1MB
    k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64                     1.14.10         6816817d9dce   5 weeks ago     40.4MB
    repo.ky.in/webcola/k8s.gcr.io/k8s-dns-kube-dns-amd64       1.14.10         55ffe31ac578   5 weeks ago     49.5MB
    k8s.gcr.io/k8s-dns-kube-dns-amd64                          1.14.10         55ffe31ac578   5 weeks ago     49.5MB
    k8s.gcr.io/k8s-dns-sidecar-amd64                           1.14.10         8a7739f672b4   5 weeks ago     41.6MB
    k8s.gcr.io/heapster-amd64                                  v1.5.2          b2d460f2d2b9   2 months ago    75.3MB
    gcr.io/kubernetes-helm/tiller                              v2.8.2          1c5314e713c2   2 months ago    71.6MB
    k8s.gcr.io/kubernetes-dashboard-amd64                      v1.8.3          0c60bcf89900   3 months ago    102MB
    k8s.gcr.io/fluentd-elasticsearch                           v2.0.4          bac506a5dbba   3 months ago    136MB
    k8s.gcr.io/k8s-dns-dnsmasq-nanny-amd64                     1.14.8          c2ce1ffb51ed   4 months ago    41MB
    k8s.gcr.io/k8s-dns-sidecar-amd64                           1.14.8          6f7f2dc7fab5   4 months ago    42.2MB
    repo.ky.in/webcola/k8s.gcr.io/k8s-dns-sidecar-amd64        1.14.8          6f7f2dc7fab5   4 months ago    42.2MB
    k8s.gcr.io/k8s-dns-kube-dns-amd64                          1.14.8          80cc5ea4b547   4 months ago    50.5MB
    gcr.io/google_containers/kubernetes-dashboard-amd64        v1.8.1          e94d2f21bc0c   5 months ago    121MB
    gcr.io/google-containers/elasticsearch                     v5.6.4          856b33b5decc   6 months ago    877MB
    gcr.io/google-containers/fluentd-elasticsearch             v2.0.2          38ec68ca7d24   6 months ago    135MB
    gcr.io/google_containers/defaultbackend                    1.4             846921f0fe0e   7 months ago    4.84MB
    gcr.io/google_containers/kubernetes-dashboard-init-amd64   v1.0.1          95bfc2b3e5a3   7 months ago    251MB
    gcr.io/google-containers/nginx-ingress-controller          0.9.0-beta.15   6a918bb54e6d   7 months ago    162MB
    gcr.io/google_containers/kubernetes-dashboard-amd64        v1.7.1          294879c6444e   7 months ago    128MB
    k8s.gcr.io/heapster-influxdb-amd64                         v1.3.3          577260d221db   8 months ago    12.5MB
    k8s.gcr.io/heapster-grafana-amd64                          v4.4.3          8cb3de219af7   8 months ago    152MB
    gcr.io/google_containers/heapster-grafana-amd64            v4.0.2          a1956d2a1a16   16 months ago   131MB
    gcr.io/google_containers/heapster-amd64                    v1.3.0-beta.1   4ff6ad0ca64c   16 months ago   101MB
    k8s.gcr.io/pause-amd64                                     3.0             99e59f495ffa   2 years ago     747kB
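The pull, save, load and push round trip from note (5) is repetitive enough to be worth generating. The sketch below prints the docker commands for one image rather than running them, so it is safe to try anywhere. The `registry/project/original-name` layout is inferred from entries such as `repo.ky.in/webcola/k8s.gcr.io/k8s-dns-sidecar-amd64` in the list; the function names and the tarball naming scheme are assumptions made for this example.

```shell
#!/bin/sh
# Map an upstream image reference to its name in the private registry.
# $1 = upstream reference, $2 = registry/project prefix (optional).
mirror_ref() {
    printf '%s/%s\n' "${2:-repo.ky.in/webcola}" "$1"
}

# Print (not run) the commands needed to mirror one image.
mirror_commands() {
    src=$1
    dst=$(mirror_ref "$src")
    # Turn "/" and ":" into "_" to get a flat tarball name.
    file=$(printf '%s' "$src" | tr '/:' '__').tar
    cat <<EOF
# on the machine that can reach gcr
docker pull $src
docker save -o $file $src
# after copying $file to a machine on the internal network
docker load -i $file
docker tag $src $dst
docker push $dst
EOF
}

mirror_commands k8s.gcr.io/pause-amd64:3.0
```

Looping over a text file of image references, one per line, would produce a full script for the whole list; printing instead of executing makes it easy to review the commands before anything is pushed.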

That covers the Kubernetes cluster and the supporting components it needs. The next part will focus on creating Jenkins and on using slaves and pipelines. Stay tuned!